Would ChatGPT be more likely to give detailed answers if you are polite to it or offer it money? That is what some users of the social network Reddit claim. “When you flatter it, ChatGPT performs better!” some of them say, surprised. Another user describes offering a $100,000 reward to the famous chatbot developed by the Californian company OpenAI, which supposedly encouraged it to “put a lot more effort” and “work much better,” reports the American outlet TechCrunch.

Researchers from Microsoft, Beijing Normal University and the Chinese Academy of Sciences raised the same point in a report published in November 2023, finding that generative AI models perform better when they are prompted politely or when the request conveys that something is at stake.

For example, with sentences such as “It is crucial that I succeed in my thesis defense” or “It is very important for my career,” the chatbot produces more complete answers to the user's question. “There have been several hypotheses for some months now. Before the summer, users had already claimed that ChatGPT gave more detailed answers if it was offered tips,” notes Giada Pistilli, a philosophy researcher and head of ethics at the start-up Hugging Face, which champions open-source artificial intelligence resources.

This behavior originates in the data the machine is trained on. “You have to think of the data in question as a block of text, in which ChatGPT reads and discovers that two users have a much richer conversation when they are polite to each other,” explains Giada Pistilli. “If the user asks a question courteously, ChatGPT will pick up on this scenario and respond in the same tone.”

“Models like ChatGPT rely on probability. For example, the model is given a sentence that it must complete. Depending on its data, it will look for the best option to complete it,” adds Adrian-Gabriel Chifu, who holds a doctorate in computer science and is a teacher-researcher at Aix-Marseille University. “It is just mathematics: ChatGPT is entirely dependent on the data it was trained on.” By the same logic, the chatbot can even respond almost aggressively if the user makes an abrupt request and the way the model was built allows it.
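To make the idea of "completing a sentence from probabilities" concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT works internally: it assumes a simple bigram model that just counts which word follows which in a handful of made-up training sentences, and the names `train_bigrams`, `next_word_probabilities` and `complete` are hypothetical. It only illustrates the point that the continuation depends entirely on the training data.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Toy model: count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def next_word_probabilities(follows, word):
    """Turn the raw follow-counts of `word` into probabilities for the next word."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def complete(follows, prompt, max_words=5):
    """Greedily append the most probable next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(max_words):
        probs = next_word_probabilities(follows, words[-1])
        if not probs:
            break  # this word was never followed by anything in the training data
        words.append(max(probs, key=probs.get))
    return " ".join(words)


# Hypothetical training sentences, invented for illustration only.
corpus = [
    "could you please explain this in detail",
    "could you please explain the steps",
    "explain this in detail",
    "explain that again",
]
follows = train_bigrams(corpus)
print(complete(follows, "could you"))  # could you please explain this in detail
```

Swap in a different corpus and the same prompt produces a different completion. Large language models operate on a vastly larger scale, with neural networks rather than word counts, but they too choose continuations according to probabilities learned from their data.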

In their report, the researchers from Microsoft, Beijing Normal University and the Chinese Academy of Sciences even speak of “emotional incentives.” The term covers ways of addressing the machine that push it to “simulate, as much as possible, human behavior,” says Giada Pistilli.

For ChatGPT to take on a role suited to the answer sought, all you need to do is use certain keywords, which trigger different personas in the machine. “If you offer it tips, it will perceive the exchange as a service transaction and take on this service role,” she says by way of example.

Depending on the request, the chatbot can quickly take on, in some users' minds, the tone a professor, a writer or a filmmaker might use. “Which leads us into a trap: treating it like a human being and thanking it for its time and help as we would a real person,” notes the philosophy researcher.

The danger lies in mistaking this simulated behavior, which is merely the product of the machine's calculations, for genuine “humanity” on the part of the robot. “Anthropomorphism has been sought in chatbot development since the '70s,” recalls Giada Pistilli, citing the chatbot Eliza as an example.

This artificial intelligence program, designed by computer scientist Joseph Weizenbaum in the 1960s, simulated a psychotherapist. “In the '70s, its answers didn't go very far: a person could explain to Eliza that he had problems with his mother, only for it to reply simply, 'I think you have problems with your mother?'” notes Giada Pistilli. “But that was already enough for some users to humanize it and attribute power to it.”

“Whatever happens, AI mostly goes along with us, depending on what we ask of it,” says researcher and lecturer Adrian-Gabriel Chifu. “Hence the need to always be careful when using these tools.”

In pop culture, the idea that intelligent robots could one day be mistaken for humans comes up regularly. The manga “Pluto” by Japanese author Naoki Urasawa, published in the 2000s and adapted into a Netflix series in 2023, is no exception. In this story, robots live alongside humans, go to school and hold jobs just like them, and it becomes increasingly difficult to tell the two apart.

In episode 2, a robot is even surprised that another, more advanced one manages to enjoy ice cream with the same pleasure as a human. “The more I pretend, the more I feel like I understand,” he explains. On this subject, researcher Adrian-Gabriel Chifu recalls the famous test devised by mathematician Alan Turing, which aimed to assess a machine's ability to imitate humans. “Turing wondered to what extent a machine could fool humans based on the data it possesses... and that was in 1950,” he concludes thoughtfully.

In Russia, Vladimir Putin stigmatizes “Western elites”

In Russia, Vladimir Putin stigmatizes “Western elites” Body warns BBVA that "the Government has the last word" in the takeover bid for Sabadell

Body warns BBVA that "the Government has the last word" in the takeover bid for Sabadell Finding yourself face to face with a man or a bear? The debate that shakes up social networks

Finding yourself face to face with a man or a bear? The debate that shakes up social networks Sabadell rejects the merger with BBVA and will fight to remain alone

Sabadell rejects the merger with BBVA and will fight to remain alone Fatal case of cholera in Mayotte: the epidemic is “contained”, assures the government

Fatal case of cholera in Mayotte: the epidemic is “contained”, assures the government The presence of blood in the urine, a warning sign of bladder cancer

The presence of blood in the urine, a warning sign of bladder cancer A baby whose mother smoked during pregnancy will age more quickly

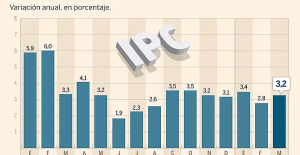

A baby whose mother smoked during pregnancy will age more quickly The euro zone economy grows in April at its best pace in almost a year but inflationary pressure increases

The euro zone economy grows in April at its best pace in almost a year but inflationary pressure increases Apple alienates artists with the ad for its new iPad praising AI

Apple alienates artists with the ad for its new iPad praising AI Duration, compensation, entry into force... Emmanuel Macron specifies the contours of future birth leave

Duration, compensation, entry into force... Emmanuel Macron specifies the contours of future birth leave Argentina: the street once again raises its voice against President Javier Milei

Argentina: the street once again raises its voice against President Javier Milei Spain: BBVA bank announces a hostile takeover bid for its competitor Sabadell

Spain: BBVA bank announces a hostile takeover bid for its competitor Sabadell Jeane Manson released from hospital Friday after heart attack

Jeane Manson released from hospital Friday after heart attack In Normandy, frescoes and bunkers from 1939-1945 come back to life in bars, lodges and exhibitions

In Normandy, frescoes and bunkers from 1939-1945 come back to life in bars, lodges and exhibitions In an Austrian concentration camp, the ghosts of the deportees summoned by Chiharu Shiota

In an Austrian concentration camp, the ghosts of the deportees summoned by Chiharu Shiota Tax fraud: Shakira is done with her legal troubles in Spain

Tax fraud: Shakira is done with her legal troubles in Spain Omoda 7, another Chinese car that could be manufactured in Spain

Omoda 7, another Chinese car that could be manufactured in Spain BYD chooses CA Auto Bank as financial partner in Spain

BYD chooses CA Auto Bank as financial partner in Spain Tesla and Baidu sign key agreement to boost development of autonomous driving

Tesla and Baidu sign key agreement to boost development of autonomous driving Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV

Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV The home mortgage firm rises 3.8% in February and the average interest moderates to 3.33%

The home mortgage firm rises 3.8% in February and the average interest moderates to 3.33% This is how housing prices have changed in Spain in the last decade

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella Institutions: senators want to restore the accumulation of mandates and put an end to the automatic presence of ex-presidents on the Constitutional Council

Institutions: senators want to restore the accumulation of mandates and put an end to the automatic presence of ex-presidents on the Constitutional Council Europeans: David Lisnard expresses his “essential and vital” support for François-Xavier Bellamy

Europeans: David Lisnard expresses his “essential and vital” support for François-Xavier Bellamy Facing Jordan Bardella, the popularity match turns to Gabriel Attal’s advantage

Facing Jordan Bardella, the popularity match turns to Gabriel Attal’s advantage Europeans: a senior official on the National Rally list

Europeans: a senior official on the National Rally list These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Champions League: when a superstitious supporter brings luck to Real Madrid

Champions League: when a superstitious supporter brings luck to Real Madrid WRC: Neuville in the lead in Portugal after the first special

WRC: Neuville in the lead in Portugal after the first special Atalanta-OM: in video, the beautiful Ruggeri strike in the top corner of Lopez

Atalanta-OM: in video, the beautiful Ruggeri strike in the top corner of Lopez Tennis: expeditious, Swiatek qualifies for the 3rd round in Rome

Tennis: expeditious, Swiatek qualifies for the 3rd round in Rome