Jonathan Zittrain is the director of the Berkman Klein Center for Internet & Society at Harvard University, where his work focuses on the consequences of the Internet. Zittrain (Pittsburgh, USA, 1969) is critical of the current state of the big technology companies and believes that we are living through today's technological changes with a "vague sense of perplexity," he says. In 2008 he wrote the book The Future of the Internet and How to Stop It. If he wrote a sequel, he would title it "Well, We Tried," Zittrain now believes.

Q. What has gone wrong?

A. First, there is a constant stream of new companies, people in basements inventing new things, but the ones that arrived first have stayed and grown strong. Second, the availability of very cheap networks and sensors means that data can be collected at all hours. Third, with tools that are not especially new but now have the data to be much sharper, judgments can be made about people, and ways can be found to intervene in their lives that were previously unknown.

Q. Not everything has been terrible in these years.

A. At least some of the things we worried about did not happen. For example, with copyright.

Q. Napster, the file-sharing site, died.

"Today I am offered a loan at 25% weekly interest because I have been identified as someone emotionally fragile"

A. For example. It was interesting to watch the copyright war between one thesis, "with Napster everything is uncontrollable and all the content industries are going bankrupt," and the other, "everything will be restricted by technology; nobody will be able to make a copy without paying." It is interesting to see that over these 10 years this war has faded. Now, for a little money or by watching an advertisement, you can see what you want. So some of our concerns about freedom of the mind, which in the end is how you could describe copyright, did not come to pass.

Q. Among the problems is privacy.

A. It is something that 10 years ago I did not worry about so much: that a company knew I had a dog and showed me ads for dog food instead of cat food seemed harmless. But today I am offered a loan at 25% weekly interest because I have been identified as someone emotionally fragile who has just lost money, and they can work out when to strike, and when they strike they do it only against me.

Q. Are we sure this happens?

A. Absolutely. Ten years ago I supported the position of the major platforms when they said, 'We are only a window onto the Internet; don't blame us if you don't like what you see.' There is still some truth in that, but increasingly they are not mere windows. They have become so large that they decide which pieces they show. We spend less time looking for something specific by asking questions and more time receiving unsolicited advice from Siri, Google Assistant, or Alexa, which are basically presented to us as "friends." They tell us the best way from here to the cinema, and when they say "best" we do not know whether it is because the route passes a pizzeria that offers them something for taking us to its door.

Q. It could already be happening.

"Twice a week the car will show up at your house and take you on a 'sponsored adventure'"

A. It is useful to think about what would happen if the online business model moved into the real world. The great challenge of autonomous cars is to make one that drives well. But the time will come when its makers will have to ask themselves how to pay for it. If you apply the online economic model, it would be: you can get into an autonomous car, it is free, but it may stop at a fast-food restaurant before arriving at your destination and wait there for 10 minutes. You can stay seated in the car or get out, stretch your legs, and buy a hamburger. If you are in a hurry, that is fine, no problem, but to keep your subscription, twice a week the car will show up at your house and take you on a 'sponsored adventure.' These are things that have no analogy in the real world today, but that artificial intelligence and big data will make possible. Do we want that world? I think it is fair to ask ourselves these questions now, instead of saying, 'Let's build it and we'll see.'

Q. You have raised this question: what happens if the police issue a warrant for someone riding in an autonomous car? Should the car lock its doors and drive to the police station or not?

A. It is good to ask that. It is a way of showing us that this is not just a replacement for drivers, but rather opens up new possibilities. In that sense it is exciting. But how do we govern all of this? It could be through what I called in my book "the procrastination principle." The theory was not to try to solve every problem right away, but to let the technology develop and solve problems afterward. To me it was a good principle then, but now it looks very difficult, because the distance between "it is too soon to know" and "it is already too late to do something" is very short. At the very least we should have a public debate in which these options are laid out. We would see that once the real world is affected, many of the barriers to regulation that we saw on the Internet suddenly fall.

Q. The platforms decide whether we see more or less disinformation. Do you have a remedy?

"For one platform to determine what 2 billion people experience is too big a responsibility"

A. The answers that seem most obvious are medicines so strong that they can be more dangerous than the disease. For one platform to determine what 2 billion people around the world experience is too big a responsibility, no matter how noble they try to be. We need to change the premise of the question. It could be something as dramatic as breaking up the company and creating many smaller ones, or it could be that the company opens up layers of its operation so that anybody could write a recipe for creating a news feed (what we see) on Facebook. Then what is on my screen would not depend on Facebook alone. That could lead people to choose recipes that reinforce their opinions, which could be a danger, but a smaller one than the danger of all of us seeing the world from the same place.

Q. And their algorithms.

A. Technology must be loyal to people and not offer them a hamburger and fries every 5 seconds. I have worked with Jack Balkin of Yale on a theory of 'information fiduciaries.' It is an overblown term, but it means that when you entrust so much information to one of these platforms, they must be loyal to you. If your interests conflict with theirs, yours must prevail. That means they can show you ads, but not ads that could harm you.

Q. You direct the Berkman Klein Center. You are dedicated to observing the Internet. When you go to Silicon Valley, how are you treated?

A. I do not get the feeling that the leaders there think I have something to tell them that they have not thought of before. They sit in a meeting room and believe they are the smartest ones there. But they know they have a persistent public-relations problem that is growing into a possible wave they would like to avoid. They see European regulation as something that can force them to accept that regulation can exist and not be catastrophic. They are looking for ways to get ahead of these problems before they make an even bigger mess and end up regulated. Then they may realize that they do not need a new feature in their software but a new political, cultural, and legal dimension to what they do. I have seen them more open to ideas and suggestions in the last six months than in the previous five years.

Q. The big companies?

A. Yes, the big technology companies have a hangover as a result of their expansion. They also now have a lot of money and want to be able to convert that wealth into a better environment, and some of them genuinely tell themselves that they want to improve the world.

Hamas-Israel war: US begins construction of pier in Gaza

Hamas-Israel war: US begins construction of pier in Gaza Israel prepares to attack Rafah

Israel prepares to attack Rafah Indifference in European capitals, after Emmanuel Macron's speech at the Sorbonne

Indifference in European capitals, after Emmanuel Macron's speech at the Sorbonne Spain: what is Manos Limpias, the pseudo-union which denounced the wife of Pedro Sánchez?

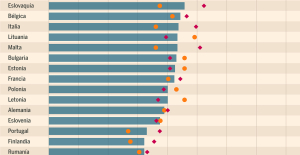

Spain: what is Manos Limpias, the pseudo-union which denounced the wife of Pedro Sánchez? Spain is the country in the European Union with the most overqualified workers for their jobs

Spain is the country in the European Union with the most overqualified workers for their jobs Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024

Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024 Colorectal cancer: what to watch out for in those under 50

Colorectal cancer: what to watch out for in those under 50 H5N1 virus: traces detected in pasteurized milk in the United States

H5N1 virus: traces detected in pasteurized milk in the United States Private clinics announce a strike with “total suspension” of their activities, including emergencies, from June 3 to 5

Private clinics announce a strike with “total suspension” of their activities, including emergencies, from June 3 to 5 The Lagardère group wants to accentuate “synergies” with Vivendi, its new owner

The Lagardère group wants to accentuate “synergies” with Vivendi, its new owner The iconic tennis video game “Top Spin” returns after 13 years of absence

The iconic tennis video game “Top Spin” returns after 13 years of absence Three Stellantis automobile factories shut down due to supplier strike

Three Stellantis automobile factories shut down due to supplier strike A pre-Roman necropolis discovered in Italy during archaeological excavations

A pre-Roman necropolis discovered in Italy during archaeological excavations Searches in Guadeloupe for an investigation into the memorial dedicated to the history of slavery

Searches in Guadeloupe for an investigation into the memorial dedicated to the history of slavery Aya Nakamura in Olympic form a few hours before the Flames ceremony

Aya Nakamura in Olympic form a few hours before the Flames ceremony Psychiatrist Raphaël Gaillard elected to the French Academy

Psychiatrist Raphaël Gaillard elected to the French Academy Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV

Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price"

Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price" The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter

The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter A private jet company buys more than 100 flying cars

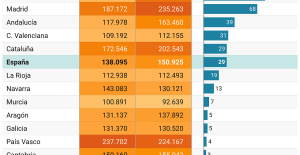

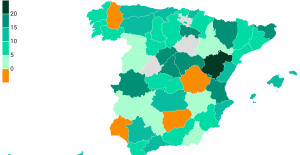

A private jet company buys more than 100 flying cars This is how housing prices have changed in Spain in the last decade

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella Rental prices grow by 7.3% in February: where does it go up and where does it go down?

Rental prices grow by 7.3% in February: where does it go up and where does it go down? “Deadly Europe”, “economic decline”, immigration… What to remember from Emmanuel Macron’s speech at the Sorbonne

“Deadly Europe”, “economic decline”, immigration… What to remember from Emmanuel Macron’s speech at the Sorbonne Sale of Biogaran: The Republicans write to Emmanuel Macron

Sale of Biogaran: The Republicans write to Emmanuel Macron Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou

Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition

With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Judo: Blandine Pont European vice-champion

Judo: Blandine Pont European vice-champion Swimming: World Anti-Doping Agency appoints independent prosecutor in Chinese doping case

Swimming: World Anti-Doping Agency appoints independent prosecutor in Chinese doping case Water polo: everything you need to know about this sport

Water polo: everything you need to know about this sport Judo: Cédric Revol on the 3rd step of the European podium

Judo: Cédric Revol on the 3rd step of the European podium