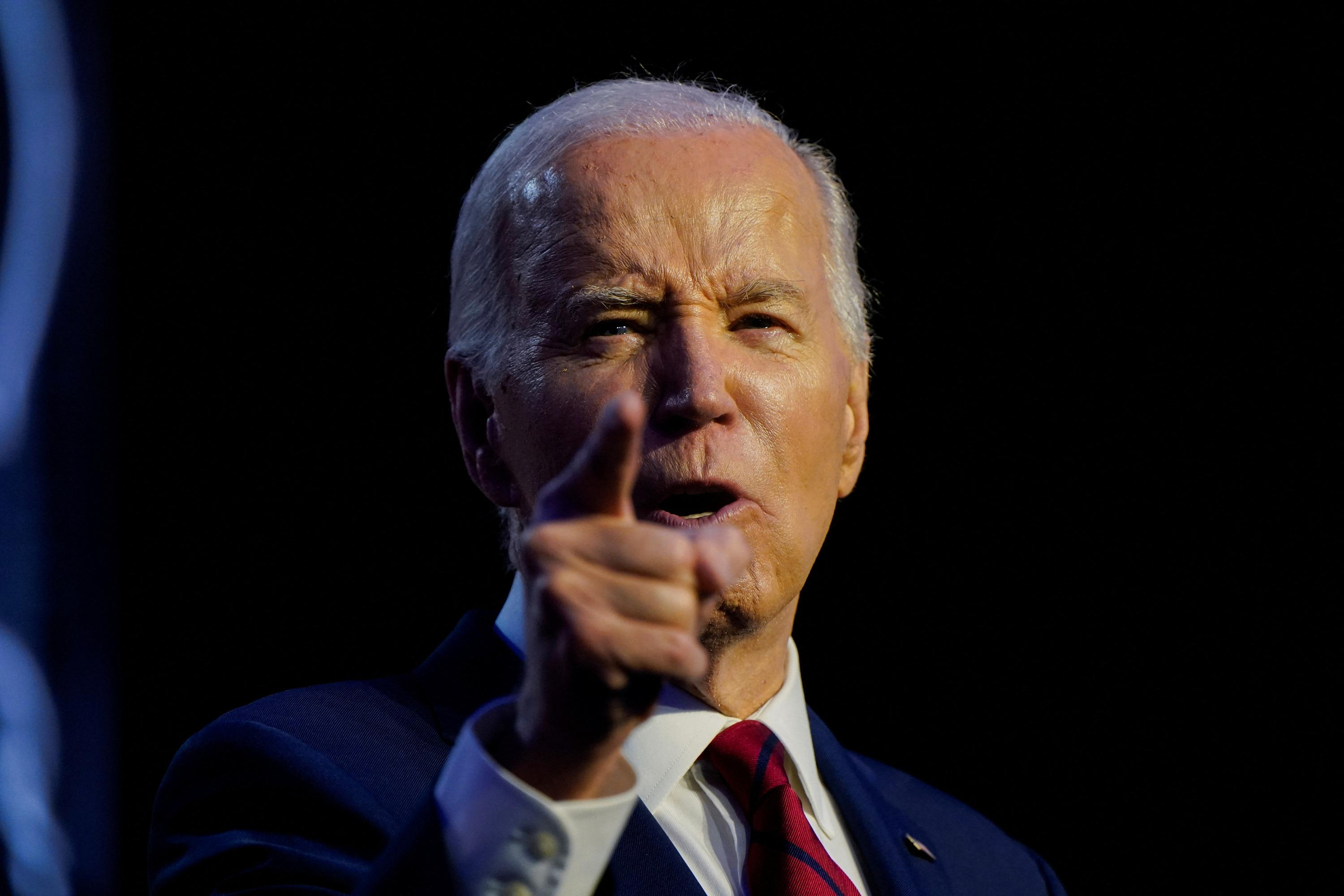

Do good by connecting people with one another. Between that stated principle and the reality created by Facebook's algorithms, the gap is wide and deep. Internal studies conducted around 2017 and 2018 starkly demonstrated this to the social network's leaders. But they watered down the proposed solutions, when they did not reject them outright, reports the Wall Street Journal. That is enough to weaken the recent attacks from Donald Trump, who claims that Facebook, and social networks in general, are biased against Republican content.

"The most persistent myth about Facebook is that the social network was unaware of the damage it causes," New York Times columnist Kevin Roose commented on Twitter. "But in reality, it has always known what it was and the harm it could do to society." And for good reason: the network had all the data on user behavior at its disposal, unlike researchers outside the company.

A sociologist employed at Facebook saw as early as 2016 that there was a problem. She had found that a third of the groups devoted to German politics were spreading racist, conspiracy-minded and pro-Russian content. These groups had a disproportionate influence thanks to a handful of hyperactive members. The study had even shown that "64% of people who join extremist groups have done so because of our recommendation algorithms". In conclusion, the sociologist warned that "our recommendation system feeds the problem".

Between 2017 and 2018, a team of engineers and researchers was set up internally to deal with this problem. It was called "Common Ground". Other entities called "Integrity Teams" were also put in place. The proposed policy was not to push people to change their opinions, but to promote content that creates "empathy and understanding".

The problem: the suggested improvements, such as widening the circle of recommendations, would have reduced user "engagement". In other words, users would have been less active on the social network. The "Common Ground" team had accepted this and itself described its proposals as "anti-growth", calling on Facebook to "adopt a moral stance".

Fear of criticism from the right

Another discovery by Facebook's researchers: far-right publications had a much larger presence on the network than far-left groups. In that context, taking steps to curb "clickbait" headlines would have disadvantaged these ultraconservative sites. The "Common Ground" team's proposals were rejected, as Facebook's leaders feared criticism from the right over censorship.

One proposal was partially adopted, however: slightly reducing the influence of hyperactive users. Some profiles, probably fake accounts used for political propaganda, spent around 20 hours a day on the network and were thus favored by Facebook's algorithms. Pushed to act, notably by the Cambridge Analytica scandal, the American giant has since taken other steps, including promoting content shared by a broad user base rather than only by activists and, conversely, demoting online sources that spread false news.

"We are no longer the same company," argued a Facebook spokesperson quoted by the Wall Street Journal. "We have learned a lot since 2016, we have built a large 'integrity' team and strengthened our policies to limit the spread of hateful content." In February, Facebook also launched a $2 million fund to finance academic studies on the effects of Facebook on the polarization of society.


United States: divided on the question of presidential immunity, the Supreme Court offers respite to Trump

United States: divided on the question of presidential immunity, the Supreme Court offers respite to Trump Maurizio Molinari: “the Scurati affair, a European injury”

Maurizio Molinari: “the Scurati affair, a European injury” Hamas-Israel war: US begins construction of pier in Gaza

Hamas-Israel war: US begins construction of pier in Gaza Israel prepares to attack Rafah

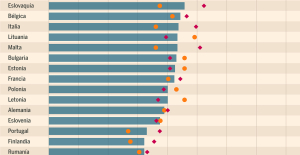

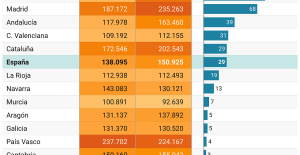

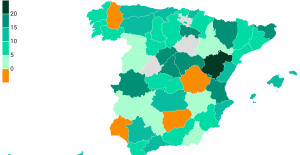

Israel prepares to attack Rafah Spain is the country in the European Union with the most overqualified workers for their jobs

Spain is the country in the European Union with the most overqualified workers for their jobs Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024

Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024 Colorectal cancer: what to watch out for in those under 50

Colorectal cancer: what to watch out for in those under 50 H5N1 virus: traces detected in pasteurized milk in the United States

H5N1 virus: traces detected in pasteurized milk in the United States Private clinics announce a strike with “total suspension” of their activities, including emergencies, from June 3 to 5

Private clinics announce a strike with “total suspension” of their activities, including emergencies, from June 3 to 5 The Lagardère group wants to accentuate “synergies” with Vivendi, its new owner

The Lagardère group wants to accentuate “synergies” with Vivendi, its new owner The iconic tennis video game “Top Spin” returns after 13 years of absence

The iconic tennis video game “Top Spin” returns after 13 years of absence Three Stellantis automobile factories shut down due to supplier strike

Three Stellantis automobile factories shut down due to supplier strike A pre-Roman necropolis discovered in Italy during archaeological excavations

A pre-Roman necropolis discovered in Italy during archaeological excavations Searches in Guadeloupe for an investigation into the memorial dedicated to the history of slavery

Searches in Guadeloupe for an investigation into the memorial dedicated to the history of slavery Aya Nakamura in Olympic form a few hours before the Flames ceremony

Aya Nakamura in Olympic form a few hours before the Flames ceremony Psychiatrist Raphaël Gaillard elected to the French Academy

Psychiatrist Raphaël Gaillard elected to the French Academy Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV

Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price"

Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price" The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter

The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter A private jet company buys more than 100 flying cars

A private jet company buys more than 100 flying cars This is how housing prices have changed in Spain in the last decade

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella Rental prices grow by 7.3% in February: where does it go up and where does it go down?

Rental prices grow by 7.3% in February: where does it go up and where does it go down? Even on a mission for NATO, the Charles-de-Gaulle remains under French control, Lecornu responds to Mélenchon

Even on a mission for NATO, the Charles-de-Gaulle remains under French control, Lecornu responds to Mélenchon “Deadly Europe”, “economic decline”, immigration… What to remember from Emmanuel Macron’s speech at the Sorbonne

“Deadly Europe”, “economic decline”, immigration… What to remember from Emmanuel Macron’s speech at the Sorbonne Sale of Biogaran: The Republicans write to Emmanuel Macron

Sale of Biogaran: The Republicans write to Emmanuel Macron Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou

Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Archery: everything you need to know about the sport

Archery: everything you need to know about the sport Handball: “We collapsed”, regrets Nikola Karabatic after PSG-Barcelona

Handball: “We collapsed”, regrets Nikola Karabatic after PSG-Barcelona Tennis: smash, drop shot, slide... Nadal's best points for his return to Madrid (video)

Tennis: smash, drop shot, slide... Nadal's best points for his return to Madrid (video) Pro D2: Biarritz wins a significant success in Agen and takes another step towards maintaining

Pro D2: Biarritz wins a significant success in Agen and takes another step towards maintaining