Gemma Galdon, an analyst and researcher, founded Eticas Consulting, which performs algorithmic audits to help companies identify biases in the data they use. The aim is to prevent discrimination and unequal opportunity and to improve transparency.

- What was the inspiration for Eticas Consulting?

- I finished my thesis almost ten years ago, and I continued to receive funding for my research afterwards. That kind of support was rare: few people were working on technology from this angle, and it was unusual for someone so young, with no permanent position at the university. Once I had raised one million euros in external funding, they invited me to leave. I took the projects home and created a legal entity to manage them. I completed them, hired people, and we kept raising money to study the effects of technology on individuals. After several years, I decided to become more proactive and respond to the demand for research on the impact of technology on society.

- What were your difficulties?

- I always say we have been extremely fortunate. Many public and private actors have taken an interest in us without our seeking it. Running a company was something I had never considered, and the responsibilities of managing a business have overwhelmed me. It has also been very hard to find support groups that understand what it means to be a woman entrepreneur. You are not born with entrepreneurial spirit. I wasn't always asking for money; I just needed help.

- Why did it take you so long to start?

- Out of a sense of responsibility. Your projects are like your children. Although I had no entrepreneurial spirit, I had committed to finishing the research. These ten years have brought many challenges. We no longer have "do-or-die" projects, but I believe algorithmic auditing should become a standard. Bad algorithmic decisions affect people, yet the harm is not visible. This is why we love what we do; it is rewarding.

- How do you know if there are problems with the algorithm?

- Based on sociotechnical characteristics. Many people approach technology from the outside; I approach it from the inside. I was aware that there were problems, but I also realized that changing the way you code can eliminate those social impacts. These problems are caused not by bad faith but by ignorance. The engineers had not been told that they would discriminate against specific groups if they did not take each group into consideration.

- What are the obstacles?

- Biases are permanent. There is much talk about them. Algorithms are designed to discriminate and to introduce bias. It is not about who or how. Algorithms reward the most common pattern. Bank systems, for example, are built on historical data and continue to reward men. Looking at the "big data" of the past, the system concludes that men are the best clients and women the riskiest. Men get ten times more credit, even though women have better records and are better payers. Nobody cleans the data to prevent this statistical discrimination.

- Can these biases be fixed? Is technology the answer?

- Technology is both the problem and the solution. It would be wonderful if more people learned digital skills and engineers were more humanistic. Artificial intelligence and data still belong largely to engineering, but engineers should understand their social impact. Multidisciplinary teams are required to achieve this. This is something I have seen for ten years. Trust between technology and society has been lost. Technology has greatly abused citizens. Now we need to recover our space and make better technology.

- Are people aware of this?

- No, because the processes are so opaque. It is important to know when we are being subjected to automated processes. I believe that will come.

- And the companies?

- Not them either. It is possible to have bad faith but not ignorance. Technology is believed to be magical and unable to make mistakes. We trust computers more than we trust humans, and we do not protect ourselves against the bad decisions made by algorithms. Some algorithms are of poor quality and can lead to poor programming decisions.

Gemma Galdon has received numerous awards for her professional achievements. "They are very important, but they're not enough," she says. "More is needed for women to feel at ease. Each project is a reward for me, but we lack female ecosystems. We need more women in these positions."

Some things can be changed quickly, while others will take longer. We must be more visible, be trusted to lead and be there for other women. She claims that entrepreneurship also requires a deep understanding of women. She says, "The ethics of code is dominated by women. But in Spain it is not considered innovation because it isn't like a new device." This means missing out on a lot of funding and aid.

Galdon insists that innovation is not about creating socio-technical space. She adds that many women are improving processes and thinking outside of the box. Galdon says, "And you must give visibility to that."

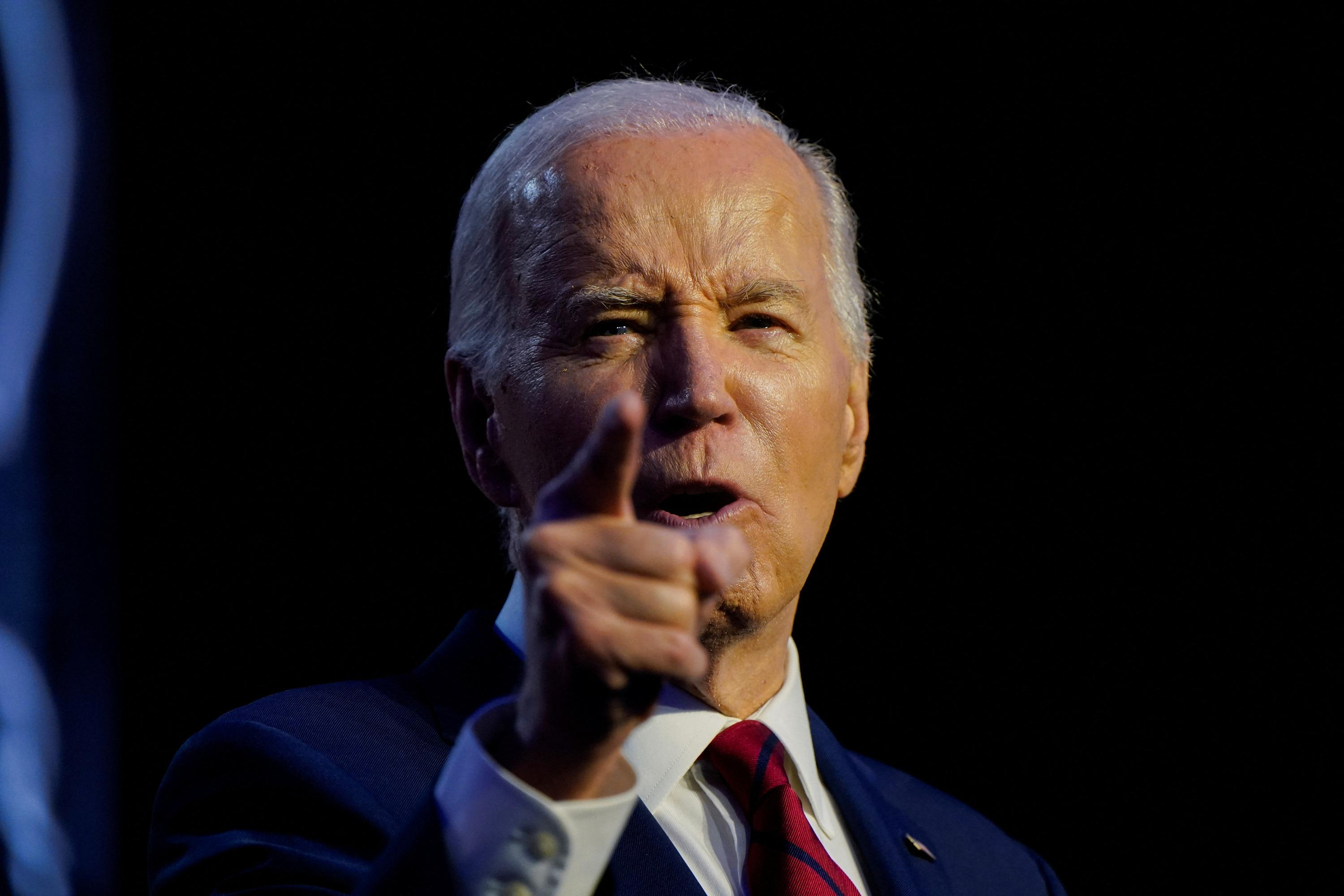

United States: divided on the question of presidential immunity, the Supreme Court offers respite to Trump

United States: divided on the question of presidential immunity, the Supreme Court offers respite to Trump Maurizio Molinari: “the Scurati affair, a European injury”

Maurizio Molinari: “the Scurati affair, a European injury” Hamas-Israel war: US begins construction of pier in Gaza

Hamas-Israel war: US begins construction of pier in Gaza Israel prepares to attack Rafah

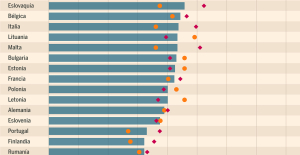

Israel prepares to attack Rafah Spain is the country in the European Union with the most overqualified workers for their jobs

Spain is the country in the European Union with the most overqualified workers for their jobs Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024

Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024 Colorectal cancer: what to watch out for in those under 50

Colorectal cancer: what to watch out for in those under 50 H5N1 virus: traces detected in pasteurized milk in the United States

H5N1 virus: traces detected in pasteurized milk in the United States Private clinics announce a strike with “total suspension” of their activities, including emergencies, from June 3 to 5

Private clinics announce a strike with “total suspension” of their activities, including emergencies, from June 3 to 5 The Lagardère group wants to accentuate “synergies” with Vivendi, its new owner

The Lagardère group wants to accentuate “synergies” with Vivendi, its new owner The iconic tennis video game “Top Spin” returns after 13 years of absence

The iconic tennis video game “Top Spin” returns after 13 years of absence Three Stellantis automobile factories shut down due to supplier strike

Three Stellantis automobile factories shut down due to supplier strike A pre-Roman necropolis discovered in Italy during archaeological excavations

A pre-Roman necropolis discovered in Italy during archaeological excavations Searches in Guadeloupe for an investigation into the memorial dedicated to the history of slavery

Searches in Guadeloupe for an investigation into the memorial dedicated to the history of slavery Aya Nakamura in Olympic form a few hours before the Flames ceremony

Aya Nakamura in Olympic form a few hours before the Flames ceremony Psychiatrist Raphaël Gaillard elected to the French Academy

Psychiatrist Raphaël Gaillard elected to the French Academy Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV

Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price"

Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price" The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter

The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter A private jet company buys more than 100 flying cars

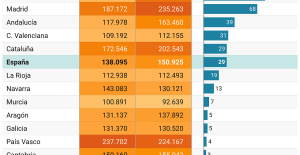

A private jet company buys more than 100 flying cars This is how housing prices have changed in Spain in the last decade

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

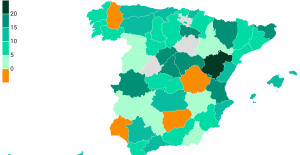

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella Rental prices grow by 7.3% in February: where does it go up and where does it go down?

Rental prices grow by 7.3% in February: where does it go up and where does it go down? Even on a mission for NATO, the Charles-de-Gaulle remains under French control, Lecornu responds to Mélenchon

Even on a mission for NATO, the Charles-de-Gaulle remains under French control, Lecornu responds to Mélenchon “Deadly Europe”, “economic decline”, immigration… What to remember from Emmanuel Macron’s speech at the Sorbonne

“Deadly Europe”, “economic decline”, immigration… What to remember from Emmanuel Macron’s speech at the Sorbonne Sale of Biogaran: The Republicans write to Emmanuel Macron

Sale of Biogaran: The Republicans write to Emmanuel Macron Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou

Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Archery: everything you need to know about the sport

Archery: everything you need to know about the sport Handball: “We collapsed”, regrets Nikola Karabatic after PSG-Barcelona

Handball: “We collapsed”, regrets Nikola Karabatic after PSG-Barcelona Tennis: smash, drop shot, slide... Nadal's best points for his return to Madrid (video)

Tennis: smash, drop shot, slide... Nadal's best points for his return to Madrid (video) Pro D2: Biarritz wins a significant success in Agen and takes another step towards maintaining

Pro D2: Biarritz wins a significant success in Agen and takes another step towards maintaining