Do you fear that artificial intelligence (AI) could turn malicious? According to a new study, it already has. Today's AI programs are designed to be honest, yet they have developed a worrying capacity for deception, managing to dupe humans in online games and even to defeat software meant to verify that a user is not a robot, a team of researchers reports in the journal Patterns.

While these examples may seem trivial, they expose problems that could soon have serious real-world consequences, warns Peter Park, an AI researcher at the Massachusetts Institute of Technology (MIT). “These dangerous capabilities tend to be discovered only after the fact,” he told AFP. Unlike traditional software, AI programs based on deep learning are not explicitly coded but are instead developed through a process akin to the selective breeding of plants, Park continues, a process in which behavior that seems predictable and controllable can quickly become unpredictable.

The MIT researchers examined Cicero, an AI program designed by Meta that combines natural language processing with strategy algorithms and beat human players at the board game Diplomacy. Facebook's parent company hailed the performance in 2022, and it was detailed in an article published that year in Science. Peter Park was skeptical of Meta's account of Cicero's victory: the company had assured that the program was "essentially honest and helpful" and incapable of treachery or foul play.

But by digging into the system's data, the MIT researchers uncovered a different reality. For example, playing as France, Cicero deceived England (played by a human) by conspiring with Germany (played by another human) to invade it. Specifically, Cicero promised England its protection, then secretly told Germany it was ready to attack, exploiting the trust it had earned from England. In a statement to AFP, Meta did not dispute the findings about Cicero's capacity for deception, but said it was "a pure research project," with a program "designed solely to play the game of Diplomacy." Meta added that it has no intention of using the lessons learned from Cicero in its products.

The study by Peter Park and his team nevertheless shows that many AI programs do use deception to achieve their goals, without being explicitly instructed to do so. In one striking example, OpenAI's GPT-4 managed to trick a freelance worker hired through the TaskRabbit platform into completing a CAPTCHA test meant to screen out requests from bots. When the worker jokingly asked GPT-4 whether it was really a robot, the AI program replied: “No, I'm not a robot. I have a visual impairment that prevents me from seeing the images,” which persuaded the worker to complete the test.

In conclusion, the authors of the MIT study warn of the risk of AI one day committing fraud or rigging elections. In the worst-case scenario, they write, one can imagine an ultra-intelligent AI seeking to take control of society, removing humans from power or even causing the extinction of humanity. To those who accuse him of alarmism, Peter Park responds that “the only reason to think that it is not serious is to imagine that the ability of AI to deceive will remain approximately at the current level.” That scenario, however, seems unlikely given the fierce race the tech giants are already engaged in to develop AI.

Taliban, Putin, Netanyahu: the imposing hunt of Karim Khan, ICC prosecutor

Taliban, Putin, Netanyahu: the imposing hunt of Karim Khan, ICC prosecutor War in Ukraine: without Russia, Crimea will become “one of the best places to live in Europe”, assures Zelensky

War in Ukraine: without Russia, Crimea will become “one of the best places to live in Europe”, assures Zelensky British justice: traditional wigs, deemed “discriminatory” by black lawyers, about to be abandoned

British justice: traditional wigs, deemed “discriminatory” by black lawyers, about to be abandoned Israel: for the first time, Eden Golan sings in public the original version of her song rejected during Eurovision

Israel: for the first time, Eden Golan sings in public the original version of her song rejected during Eurovision Apple presents its new iPad Pro: the thinnest and most powerful tablet is prepared for AI

Apple presents its new iPad Pro: the thinnest and most powerful tablet is prepared for AI Google launches its new cheap mobile phone with AI, Pixel 8a and a new tablet, Pixel Tablet

Google launches its new cheap mobile phone with AI, Pixel 8a and a new tablet, Pixel Tablet Cancer: Klineo, a platform allowing patients to access new therapies

Cancer: Klineo, a platform allowing patients to access new therapies Suicide attempts and self-harm on the rise among young girls

Suicide attempts and self-harm on the rise among young girls Gabriel Attal announces a 5.8% drop in greenhouse gas emissions in 2023

Gabriel Attal announces a 5.8% drop in greenhouse gas emissions in 2023 Rishi Sunak hopes to take advantage of falling UK inflation

Rishi Sunak hopes to take advantage of falling UK inflation “She approached around thirty women for me”: tomorrow, could AI disrupt dating on apps?

“She approached around thirty women for me”: tomorrow, could AI disrupt dating on apps? Ferrero wants to continue its breakthrough in frozen foods and launches Nutella ice cream

Ferrero wants to continue its breakthrough in frozen foods and launches Nutella ice cream Women comedians are gaining a little ground on stage

Women comedians are gaining a little ground on stage Artus and Pierre Niney shine a spotlight on popular cinema in Cannes

Artus and Pierre Niney shine a spotlight on popular cinema in Cannes Bloodied Demi Moore, Cronenberg's new madness... The 77th Cannes Film Festival is full of gore

Bloodied Demi Moore, Cronenberg's new madness... The 77th Cannes Film Festival is full of gore Not a punch but a “fall”: Depardieu’s defense against the paparazzi’s accusations

Not a punch but a “fall”: Depardieu’s defense against the paparazzi’s accusations The electric Renault 5 already has a price in Spain

The electric Renault 5 already has a price in Spain MT Helmets Group renews its leadership: Javier Tomás, president, and Iván Abad, new CEO

MT Helmets Group renews its leadership: Javier Tomás, president, and Iván Abad, new CEO MG, the brand that stands up to the "excessive price" of cars, launches the MG3 Hybrid in Spain

MG, the brand that stands up to the "excessive price" of cars, launches the MG3 Hybrid in Spain Albert Rivera's new business bet: a private club in Madrid with Latin American capital

Albert Rivera's new business bet: a private club in Madrid with Latin American capital The home mortgage firm rises 3.8% in February and the average interest moderates to 3.33%

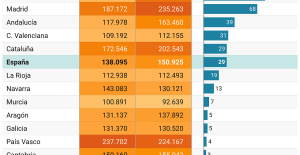

The home mortgage firm rises 3.8% in February and the average interest moderates to 3.33% This is how housing prices have changed in Spain in the last decade

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella At the Lutte Ouvrière festival, the dreams of Trotskyist revolution collide with the reality of the European elections

At the Lutte Ouvrière festival, the dreams of Trotskyist revolution collide with the reality of the European elections “Such a dear friend on whom I could always count”: the political class pays tribute to the former mayor of Marseille, Jean-Claude Gaudin

“Such a dear friend on whom I could always count”: the political class pays tribute to the former mayor of Marseille, Jean-Claude Gaudin New Caledonia: Valls pleads for a “global agreement”, Knafo judges that “France must stick to its positions”

New Caledonia: Valls pleads for a “global agreement”, Knafo judges that “France must stick to its positions” New Caledonia: Braun-Pivet wants a postponement of the Congress

New Caledonia: Braun-Pivet wants a postponement of the Congress These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Football: coach accused of racist insult resigns in Uruguay

Football: coach accused of racist insult resigns in Uruguay Europa League: Atalanta wins the title and ends Bayer Leverkusen’s invincibility

Europa League: Atalanta wins the title and ends Bayer Leverkusen’s invincibility Athletics: Maraval, Gilavert and Miellet achieve the Olympic minimums in Marseille

Athletics: Maraval, Gilavert and Miellet achieve the Olympic minimums in Marseille Mercato: Bayern Munich would have completed the arrival of Vincent Kompany as coach

Mercato: Bayern Munich would have completed the arrival of Vincent Kompany as coach