It began as a routine lawsuit: an individual suing an airline he holds responsible for injuries he says he suffered. But as the New York Times reveals, the airline's lawyers were taken aback by the brief filed by the plaintiff's lawyers: among the precedents cited in support of their request were several cases that simply never existed.

The New York judge in charge of the case, P. Kevin Castel, then wrote to the plaintiff's lawyers to ask for an explanation: "six of the judgments cited refer to false court decisions and mention false citations", he observed.

Implicated, the law firm Levidow

Schwartz, who expressed "tremendous regret" to the court when he realized his mistake, explained that he had never used ChatGPT before and was unaware that some of the answers the chatbot provided could be made up, and therefore false. ChatGPT does, however, warn its users that it sometimes risks “providing incorrect information”.

The lawyer provided the court with screenshots of his ChatGPT interactions, showing that the chatbot had confirmed to him that one of the fictitious rulings did exist. When the lawyer asked it what its sources were, the artificial intelligence cited LexisNexis and Westlaw, two databases that index court decisions. However, when we enter “Varghese v. China Southern Airlines Co Ltd” (the name of one of the rulings cited in the brief) in the LexisNexis search engine, no results are found.

The two lawyers, Steven A. Schwartz and Peter LoDuca, have been summoned to a hearing on June 8, with a view to possible disciplinary proceedings against them. Schwartz promised the court that he would no longer use ChatGPT for research without then verifying for himself the authenticity of the judgments the artificial intelligence proposes.

United States: divided on the question of presidential immunity, the Supreme Court offers respite to Trump

United States: divided on the question of presidential immunity, the Supreme Court offers respite to Trump Maurizio Molinari: “the Scurati affair, a European injury”

Maurizio Molinari: “the Scurati affair, a European injury” Hamas-Israel war: US begins construction of pier in Gaza

Hamas-Israel war: US begins construction of pier in Gaza Israel prepares to attack Rafah

Israel prepares to attack Rafah First three cases of “native” cholera confirmed in Mayotte

First three cases of “native” cholera confirmed in Mayotte Meningitis: compulsory vaccination for babies will be extended in 2025

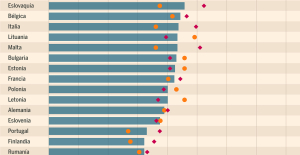

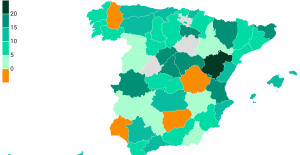

Meningitis: compulsory vaccination for babies will be extended in 2025 Spain is the country in the European Union with the most overqualified workers for their jobs

Spain is the country in the European Union with the most overqualified workers for their jobs Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024

Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024 Falling wings of the Moulin Rouge: who will pay for the repairs?

Falling wings of the Moulin Rouge: who will pay for the repairs? “You don’t sell a company like that”: Roland Lescure “annoyed” by the prospect of a sale of Biogaran

“You don’t sell a company like that”: Roland Lescure “annoyed” by the prospect of a sale of Biogaran Insults, threats of suicide, violence... Attacks by France Travail agents will continue to soar in 2023

Insults, threats of suicide, violence... Attacks by France Travail agents will continue to soar in 2023 TotalEnergies boss plans primary listing in New York

TotalEnergies boss plans primary listing in New York La Pléiade arrives... in Pléiade

La Pléiade arrives... in Pléiade In Japan, an animation studio bets on its creators suffering from autism spectrum disorders

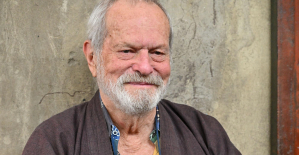

In Japan, an animation studio bets on its creators suffering from autism spectrum disorders Terry Gilliam, hero of the Annecy Festival, with Vice-Versa 2 and Garfield

Terry Gilliam, hero of the Annecy Festival, with Vice-Versa 2 and Garfield François Hollande, Stéphane Bern and Amélie Nothomb, heroes of one evening on the beach of the Cannes Film Festival

François Hollande, Stéphane Bern and Amélie Nothomb, heroes of one evening on the beach of the Cannes Film Festival Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV

Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price"

Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price" The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter

The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter A private jet company buys more than 100 flying cars

A private jet company buys more than 100 flying cars This is how housing prices have changed in Spain in the last decade

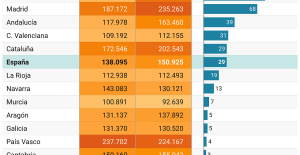

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella Rental prices grow by 7.3% in February: where does it go up and where does it go down?

Rental prices grow by 7.3% in February: where does it go up and where does it go down? Even on a mission for NATO, the Charles-de-Gaulle remains under French control, Lecornu responds to Mélenchon

Even on a mission for NATO, the Charles-de-Gaulle remains under French control, Lecornu responds to Mélenchon “Deadly Europe”, “economic decline”, immigration… What to remember from Emmanuel Macron’s speech at the Sorbonne

“Deadly Europe”, “economic decline”, immigration… What to remember from Emmanuel Macron’s speech at the Sorbonne Sale of Biogaran: The Republicans write to Emmanuel Macron

Sale of Biogaran: The Republicans write to Emmanuel Macron Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou

Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Medicine, family of athletes, New Zealand…, discovering Manae Feleu, the captain of the Bleues

Medicine, family of athletes, New Zealand…, discovering Manae Feleu, the captain of the Bleues Football: OM wants to extend Leonardo Balerdi

Football: OM wants to extend Leonardo Balerdi Six Nations F: France-England shatters the attendance record for women’s rugby in France

Six Nations F: France-England shatters the attendance record for women’s rugby in France Judo: eliminated in the 2nd round of the European Championships, Alpha Djalo in full doubt

Judo: eliminated in the 2nd round of the European Championships, Alpha Djalo in full doubt