In 2014, Amazon developed a recruiting artificial intelligence that learned to prefer men and began to discriminate against women. A year later, a Google Photos user noticed that the program labeled his Black friends as gorillas. In 2018 it emerged that an algorithm used to estimate the likelihood of reoffending for a million convicts in the United States was wrong as often as people with no judicial or forensic expertise. Decisions once made by humans are now made by artificial intelligence systems, including decisions about hiring, granting credit, medical diagnoses and even court rulings. But using these systems carries a risk, because the data used to train the algorithms are shaped by our knowledge and our prejudices.

“Data are a reflection of reality. If reality is biased, so is the data,” Richard Benjamins, big data and artificial intelligence ambassador at Telefónica, tells EL PAÍS. To prevent an algorithm from discriminating against certain groups, he argues, it is necessary to verify that the training data contain no bias and, while testing the algorithm, to analyze the rates of false positives and false negatives. “It is much more serious for an algorithm to discriminate in an unwanted way in legal matters, lending or admission to education than in domains such as movie recommendations or advertising,” says Benjamins.
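
Benjamins's advice to analyze false positives and false negatives can be illustrated by comparing those error rates across groups on a test set. The sketch below is a minimal illustration, not any company's actual tool: it assumes binary labels (1 = positive outcome) and a single sensitive attribute, and the function name `error_rates_by_group` and the toy data are hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Return {group: (false_positive_rate, false_negative_rate)}.

    Assumes binary labels: 1 = positive outcome, 0 = negative outcome.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c["pos"] += 1
            if pred == 0:
                c["fn"] += 1  # a real positive case the model missed
        else:
            c["neg"] += 1
            if pred == 1:
                c["fp"] += 1  # a real negative case the model flagged
    rates = {}
    for group, c in counts.items():
        fpr = c["fp"] / c["neg"] if c["neg"] else float("nan")
        fnr = c["fn"] / c["pos"] if c["pos"] else float("nan")
        rates[group] = (fpr, fnr)
    return rates


# Hypothetical test-set results for two groups, A and B.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

for group, (fpr, fnr) in sorted(error_rates_by_group(y_true, y_pred, groups).items()):
    print(f"{group}: FPR={fpr:.2f} FNR={fnr:.2f}")
```

On this invented data the model makes no errors for group A but has a 50% false positive rate and a 50% false negative rate for group B, the kind of gap such a test is meant to surface.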

Isabel Fernández, managing director of applied intelligence at Accenture, gives the example of automated mortgage approvals: “Imagine that in the past most applicants were men, and the few women who were granted a mortgage had to meet such demanding criteria that all of them kept up with their payments. If we used this data as is, the system would conclude that women today are better payers than men, which is merely a reflection of a past prejudice.”
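
Fernández's example describes selection bias: the historical records only contain applicants who passed the old approval rule. The toy sketch below uses entirely invented records and a hypothetical approval rule (`past_policy_approves`) to show how a naive repayment rate computed only on approved applicants makes women look like better payers, even though both groups behave identically in the full invented population.

```python
# Hypothetical applicants: (sex, income_in_thousands, would_repay).
# would_repay is known for everyone only because this data is invented;
# in practice, outcomes for rejected applicants are never observed.
applicants = [
    ("M", 30, False), ("M", 35, False), ("M", 40, True),
    ("M", 50, True), ("M", 60, True), ("M", 80, True),
    ("F", 30, False), ("F", 35, False), ("F", 40, True),
    ("F", 50, True), ("F", 60, True), ("F", 90, True),
]


def past_policy_approves(sex, income):
    """Hypothetical biased rule: men need income >= 30, women need >= 85."""
    return income >= (30 if sex == "M" else 85)


def repayment_rate(records, sex):
    """Share of records for the given sex that repaid."""
    outcomes = [repaid for s, _, repaid in records if s == sex]
    return sum(outcomes) / len(outcomes)


# Only approved applicants end up in the historical training data.
training_data = [a for a in applicants if past_policy_approves(a[0], a[1])]

for label, records in (("approved only", training_data), ("all applicants", applicants)):
    men = repayment_rate(records, "M")
    women = repayment_rate(records, "F")
    print(f"{label}: men {men:.2f}, women {women:.2f}")
```

This prints `approved only: men 0.67, women 1.00` followed by `all applicants: men 0.67, women 0.67`: the apparent advantage comes entirely from who was allowed into the data.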

Women are also frequently affected by these biases. “Algorithms are usually developed the way mostly white men between 25 and 50 decided during a meeting. On that basis, it is difficult to capture the opinion or perception of minority groups, or of the other 50% of the population, women,” explains Nerea Luis Mingueza. This researcher in robotics and artificial intelligence at Universidad Carlos III says that underrepresented groups will always be the most affected by technological products: “For example, speech-recognition systems fail more often with women's or children's voices.”


Minorities are more likely to be affected by these biases simply as a matter of statistics, according to José María Lucia, partner in charge of the artificial intelligence and data analytics center at EY Wavespace: “There will be fewer cases available for training.” “In addition, any group that has suffered discrimination of any kind in the past may be vulnerable, because by using historical data we may, without realizing it, be building that bias into the training,” he explains.

This is the case of the Black population in the United States, according to Accenture senior manager Juan Alonso: “It has been shown that, for the same minor offense, such as smoking a joint in public or possessing small amounts of marijuana, a white person will not be arrested but a person of color will.” As a result, he argues, Black people make up a higher percentage of the database, and an algorithm trained on this information would have a racial bias.

Google sources explained that it is essential to “be very careful” about giving an artificial intelligence system the power to make any decision on its own: “Artificial intelligence produces answers based on existing data, so humans must recognize that it does not necessarily give flawless results.” For this reason, the company favors having a person make the final decision in most applications.

The black box

In many cases, machines end up being a black box full of secrets, even to their own developers, who are unable to understand which path the model followed to reach a specific conclusion. Alonso argues that “normally, when you are tried, you are given an explanation in the ruling”: “But the problem is that this type of algorithm is opaque. You are facing a kind of oracle that is going to hand down a verdict.”


“Imagine that you go to an outdoor festival and, when you reach the front row, the security staff move you away without giving any explanation. You are going to feel indignant. But if I explain that the front row is reserved for people in wheelchairs, you will step back without getting angry. The same thing happens with these algorithms: if we do not know what is going on, it can produce a feeling of dissatisfaction,” explains Alonso.

To resolve this dilemma, artificial intelligence researchers call for transparency and explainability in how models are trained. Large technology companies such as Microsoft advocate a set of principles for the responsible use of artificial intelligence and are driving initiatives that try to open the black box of algorithms and explain the reasons behind their decisions.

Telefónica is hosting a challenge at LUCA, its data unit, aimed at creating new tools to detect unwanted bias in data. Accenture has developed AI Fairness, and IBM has also developed its own tool that detects bias and explains how artificial intelligence reaches certain decisions. For Francesca Rossi, director of artificial intelligence ethics at IBM, the key is that artificial intelligence systems be transparent and trustworthy: “People have a right to ask how an intelligent system suggests certain decisions and not others, and companies have a duty to help people understand the decision-making process.”

Sydney: Assyrian bishop stabbed, conservative TikToker outspoken on Islam

Sydney: Assyrian bishop stabbed, conservative TikToker outspoken on Islam Torrential rains in Dubai: “The event is so intense that we cannot find analogues in our databases”

Torrential rains in Dubai: “The event is so intense that we cannot find analogues in our databases” Rishi Sunak wants a tobacco-free UK

Rishi Sunak wants a tobacco-free UK In Africa, the number of millionaires will boom over the next ten years

In Africa, the number of millionaires will boom over the next ten years Can relaxation, sophrology and meditation help with insomnia?

Can relaxation, sophrology and meditation help with insomnia? WHO concerned about spread of H5N1 avian flu to new species, including humans

WHO concerned about spread of H5N1 avian flu to new species, including humans New generation mosquito nets prove much more effective against malaria

New generation mosquito nets prove much more effective against malaria Covid-19: everything you need to know about the new vaccination campaign which is starting

Covid-19: everything you need to know about the new vaccination campaign which is starting For the Olympics, SNCF is developing an instant translation application

For the Olympics, SNCF is developing an instant translation application La Poste deploys mobile post office trucks in 5 rural departments

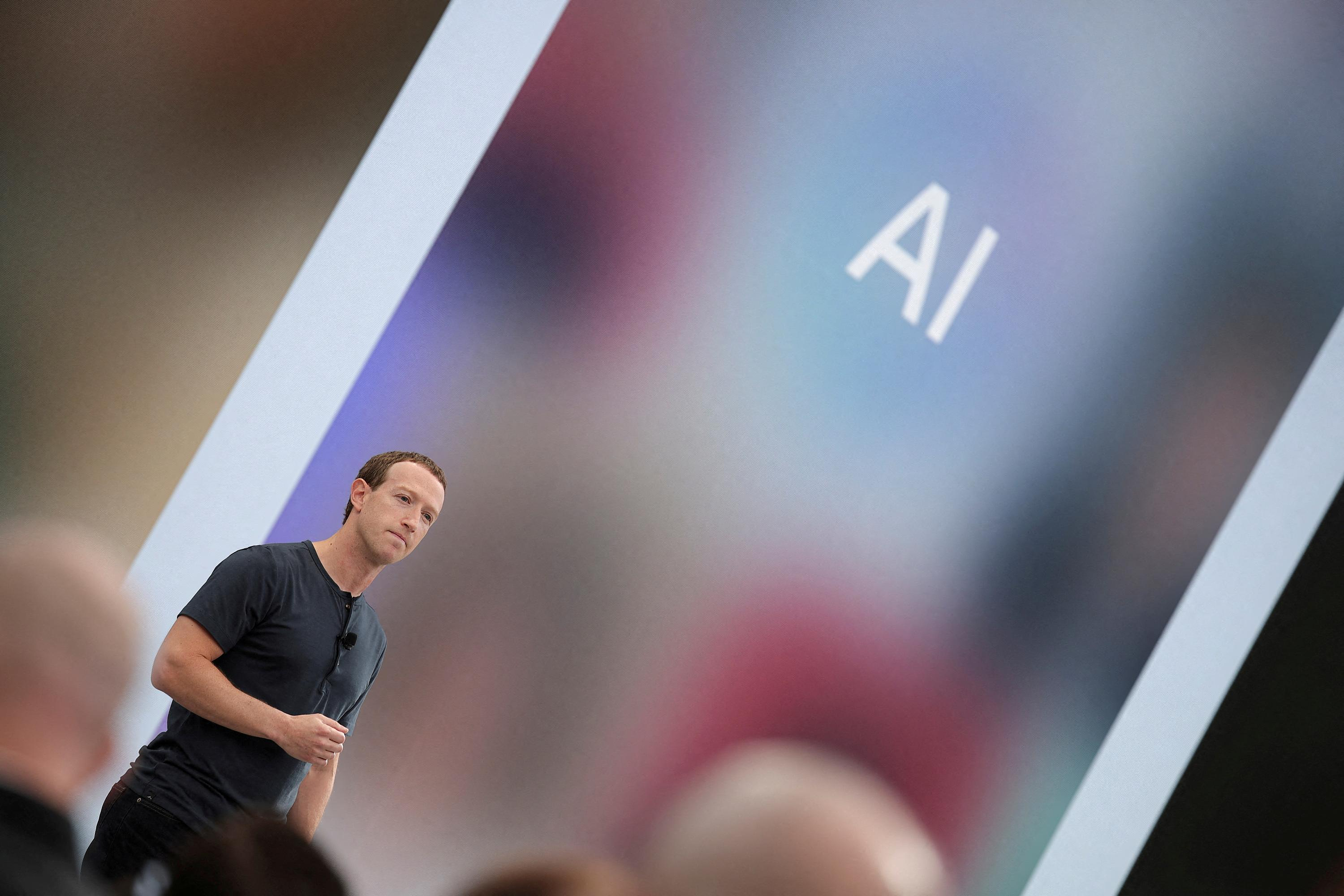

La Poste deploys mobile post office trucks in 5 rural departments Meta accelerates into generative artificial intelligence with Llama 3

Meta accelerates into generative artificial intelligence with Llama 3 In China, Apple forced to withdraw WhatsApp and Threads applications at the request of the authorities

In China, Apple forced to withdraw WhatsApp and Threads applications at the request of the authorities The main facade of the old Copenhagen Stock Exchange collapsed, two days after the fire started

The main facade of the old Copenhagen Stock Exchange collapsed, two days after the fire started Alain Delon decorated by Ukraine for his support in the conflict against Russia

Alain Delon decorated by Ukraine for his support in the conflict against Russia Who’s Who launches the first edition of its literary prize

Who’s Who launches the first edition of its literary prize Sylvain Amic appointed to the Musée d’Orsay to replace Christophe Leribault

Sylvain Amic appointed to the Musée d’Orsay to replace Christophe Leribault Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV

Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price"

Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price" The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter

The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter A private jet company buys more than 100 flying cars

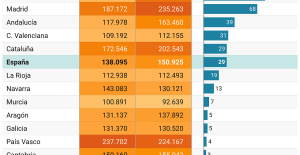

A private jet company buys more than 100 flying cars This is how housing prices have changed in Spain in the last decade

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

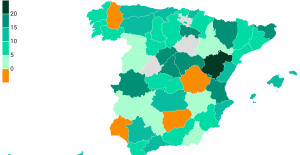

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella Rental prices grow by 7.3% in February: where does it go up and where does it go down?

Rental prices grow by 7.3% in February: where does it go up and where does it go down? With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition

With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition Europeans: the schedule of debates to follow between now and June 9

Europeans: the schedule of debates to follow between now and June 9 Europeans: “In France, there is a left and there is a right,” assures Bellamy

Europeans: “In France, there is a left and there is a right,” assures Bellamy During the night of the economy, the right points out the budgetary flaws of the macronie

During the night of the economy, the right points out the budgetary flaws of the macronie These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Europa League: “We dream of everything,” says Jean-Louis Gasset

Europa League: “We dream of everything,” says Jean-Louis Gasset Europa League: “Trouble playing our football,” admits Benfica coach

Europa League: “Trouble playing our football,” admits Benfica coach Europa League Conference: “Martinez eats all your deaths”, Obraniak’s breakdown after the elimination of Lille

Europa League Conference: “Martinez eats all your deaths”, Obraniak’s breakdown after the elimination of Lille Premier League: “It’s a team that is transforming into the Champions League”, Casemiro returned to Real’s qualification

Premier League: “It’s a team that is transforming into the Champions League”, Casemiro returned to Real’s qualification