Among all the revolutions that will come to shape our future, the one related to artificial intelligence (AI) is probably the one with the greatest potential impact. It is also the one that generates the most uncertainty. The news about progress in this branch of science seems to alternate between good and bad. One day we are informed about its use to prevent illegal hunting or to detect emerging infections. The next day, we are reminded of the risks of misusing the technology.

Added to this news are the open letters and statements from dissenting figures such as Elon Musk or Bill Gates, who warn of the danger of employing AI in the arms industry. And there are skeptics of the discipline, who believe that we have to be cautious with these overly apocalyptic or enthusiastic visions. With so many views, how have they affected society's perception of this field?

Elisabet Roselló is of the opinion that all these narratives are reaching citizens, but that a large part of society is almost completely unaware of any advance in artificial intelligence. This consultant in strategic innovation and social trends research believes that “the population may recall some anecdotal reports that have appeared on the TV news, but will not know of the existence of these open letters either”.

For Roselló, the disputes arising from the use of AI “resemble those that arose a few years ago with genetic research. There were opposing movements, such as religious ones, but the research has continued forward, always within a regulatory framework that, indeed, should be defined in detail for AI”. These frameworks should in turn be rewritten because, according to the founder of Postfuturear, they “no longer work as they were designed two centuries ago. Non-state actors such as Google or IBM have a lot of power and may go beyond these regulations.”

These are big companies that, along with universities, are creating many jobs related to artificial intelligence. Research in this area is being strongly backed by businesses and governments. According to Dr. Francisco R. Villatoro, professor in the Department of Languages and Computer Science, in the area of Computer Science and Artificial Intelligence, at the University of Málaga, there are “no cuts to research in AI; on the contrary, there are several countries that are betting more heavily on these technologies every day. And many technology companies”. If there is fear about the advance of this technology, it does not seem to be felt in much of public and private investment. Google, however, did not renew the contract that tied it to the Pentagon on artificial intelligence. Was it a move to keep its corporate image away from the weapons sector?

ICRAC, the International Committee for Robot Arms Control, supported the initiative at Google with another open letter addressed to the leaders of the company. In it, 1,179 researchers and scientists express solidarity with the more than 3,000 Google employees calling on the company to stop developing military technology and storing personal information for military purposes. Peter Asaro, one of the spokespersons for the ICRAC letter, believes that this rise of open letters against the military use of AI comes from the fact that “the population is discovering the negative effects of these technologies after many years of unquestioned optimism”. According to Asaro, “workers at technology companies are also realizing the ways in which they are complicit and are beginning to organize to shape the ethics of their companies.”

The future

On the future of research in AI, Asaro is blunt: “We must stop research on autonomous weapons and establish regulations on their use. There may be many futures for research in AI, and autonomy and choice of goals are just two of them.” That would be, according to the researcher, a great step toward conveying to society as a whole that there are no dangers in the evolution of AI. “We also need greater transparency and accountability in the use of AI on the part of large companies,” he concludes, “on where they draw the line, the assumptions they build on, and the uses they intend for it.”

All in all, if there is a fear of AI among the bulk of society, it seems to be more related to practical, day-to-day matters, such as the future of work. In that sense, there are two relevant actors in how the effect of AI is communicated: the World Economic Forum and Singularity University. “They are entities that promote economic development,” says Roselló, “but they can be guilty of a certain ambiguity when it comes to communicating. Sometimes they resort to scaremongering, and this fosters a widespread fear of losing one's job among the least prepared people.”

It is a fear that series and films constantly convey through speculative or science fiction. These are stories to which perhaps we should pay as much attention as the news that reaches us from research centres or experts in the field. “Look at how ideas as far-fetched and unthinkable a decade ago as universal income are already being put on the table today”, says Guillem López, a science fiction novelist. “The idea of a society in which there is no work for more than half of the population is starting to become palpable. However, perhaps what we should consider is the end of wage labour and private property as we have known them until now. This is where fiction portrays a future that is not only plausible but probable and, also, necessary”.

After 13 years of mission and seven successive leaders, the UN at an impasse in Libya

After 13 years of mission and seven successive leaders, the UN at an impasse in Libya Germany: search of AfD headquarters in Lower Saxony, amid accusations of embezzlement

Germany: search of AfD headquarters in Lower Saxony, amid accusations of embezzlement Faced with Iran, Israel plays appeasement and continues its shadow war

Faced with Iran, Israel plays appeasement and continues its shadow war Iran-Israel conflict: what we know about the events of the night after the explosions in Isfahan

Iran-Israel conflict: what we know about the events of the night after the explosions in Isfahan “Even morphine doesn’t work”: Léane, 17, victim of the adverse effects of an antibiotic

“Even morphine doesn’t work”: Léane, 17, victim of the adverse effects of an antibiotic Sánchez condemns Iran's attack on Israel and calls for "containment" to avoid an escalation

Sánchez condemns Iran's attack on Israel and calls for "containment" to avoid an escalation China's GDP grows 5.3% in the first quarter, more than expected

China's GDP grows 5.3% in the first quarter, more than expected Alert on the return of whooping cough, a dangerous respiratory infection for babies

Alert on the return of whooping cough, a dangerous respiratory infection for babies Vacation departures and returns: with the first crossovers, heavy traffic is expected this weekend

Vacation departures and returns: with the first crossovers, heavy traffic is expected this weekend “Têtu”, “Ideat”, “The Good Life”… The magazines of the I/O Media group resold to several buyers

“Têtu”, “Ideat”, “The Good Life”… The magazines of the I/O Media group resold to several buyers The A13 motorway closed in both directions for an “indefinite period” between Paris and Normandy

The A13 motorway closed in both directions for an “indefinite period” between Paris and Normandy The commitment to reduce taxes of 2 billion euros for households “will be kept”, assures Gabriel Attal

The commitment to reduce taxes of 2 billion euros for households “will be kept”, assures Gabriel Attal The exclusive Vespa that pays tribute to 140 years of Piaggio

The exclusive Vespa that pays tribute to 140 years of Piaggio Kingdom of the great maxi scooters: few and Kymco wants the crown of the Yamaha TMax

Kingdom of the great maxi scooters: few and Kymco wants the crown of the Yamaha TMax A complaint filed against Kanye West, accused of hitting an individual who had just attacked his wife

A complaint filed against Kanye West, accused of hitting an individual who had just attacked his wife In Béarn, a call for donations to renovate the house of Henri IV's mother

In Béarn, a call for donations to renovate the house of Henri IV's mother Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV

Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price"

Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price" The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter

The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter A private jet company buys more than 100 flying cars

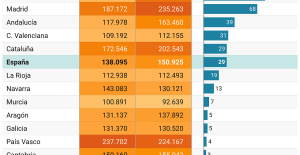

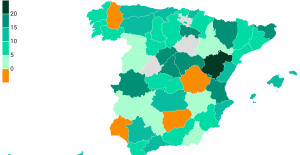

A private jet company buys more than 100 flying cars This is how housing prices have changed in Spain in the last decade

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella Rental prices grow by 7.3% in February: where does it go up and where does it go down?

Rental prices grow by 7.3% in February: where does it go up and where does it go down? With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition

With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition Europeans: the schedule of debates to follow between now and June 9

Europeans: the schedule of debates to follow between now and June 9 Europeans: “In France, there is a left and there is a right,” assures Bellamy

Europeans: “In France, there is a left and there is a right,” assures Bellamy During the night of the economy, the right points out the budgetary flaws of the macronie

During the night of the economy, the right points out the budgetary flaws of the macronie These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Formula 1: Verstappen wins the sprint in China, Hamilton 2nd

Formula 1: Verstappen wins the sprint in China, Hamilton 2nd Rally: Neuville and Evans neck and neck after the first day in Croatia

Rally: Neuville and Evans neck and neck after the first day in Croatia Gymnastics: after Rio and Tokyo, Frenchman Samir Aït Saïd qualified for the Paris 2024 Olympics

Gymnastics: after Rio and Tokyo, Frenchman Samir Aït Saïd qualified for the Paris 2024 Olympics Top 14: in the fight for maintenance, Perpignan has the wind at its back

Top 14: in the fight for maintenance, Perpignan has the wind at its back