About a year ago, the European Commission published a proposal for a regulation on Artificial Intelligence.

The proposal is part of what appears to be a race by the European Union to regulate the digital and technological environment. The reasons are many, among them a global context of political and social upheaval that, rightly or wrongly, has been attributed, among other things, to the digitization of public discourse and social life.

This great regulatory effort has produced important proposals such as the Digital Services Act (DSA) and the Digital Markets Act (DMA), but the Artificial Intelligence Regulation seems different in nature. While the other proposals essentially attempt to regulate the business or management of certain technologies or digital environments, such as social networks, the Artificial Intelligence Regulation goes further: it effectively prohibits or restricts the very development, marketing and use of certain technologies. In other words, the proposal intends to regulate the technology itself, and not only the results of its use.

Herein lies the first problem with the proposal. Regulating technology is a daunting, difficult and often futile task, especially when we are talking about products that, as is the case with Artificial Intelligence, are in many cases just software, that is, lines of code implementing algorithms. Add to this the characteristic opacity of those algorithms, the difficulty of clearly identifying failures in their use, and the difficulty of identifying those responsible for damage caused by incorrect or improper use, and the result is a highly regulated and bureaucratized environment, but not necessarily one that will lead to effective social protection.

Furthermore, the European Union's rush to regulate vast areas of digital life appears to be motivated by fear: fear of technology itself, fear of being left behind in the digital economy, and fear of a regulatory and political fragmentation that would harm the very essence of the European common market and of the European Union itself, which risks being supplanted by the immense power and size of so-called big tech.

Having fallen behind the US and China in the technology race, Europe is not the birthplace of any of the digital giants and, to some extent, finds itself hostage to a digital culture shaped by foreign values and legal contexts that nonetheless projects and exercises enormous power, both over its economy and at the social level. Given this enormous concentration of economic and political power in the hands of big tech, and the fact that, by the very nature of their activities, this concentration tends to keep growing, the European Union has been forced to react. And it has reacted in the way it knows how: regulating de jure so as not to be, itself, regulated de facto.

But fear is not a good legislative adviser and generally leads to the hasty creation of regulations that are counterproductive or very difficult to apply. By generating a false sense of security, such regulations can leave society even more vulnerable, quite the opposite of what is intended.

This does not mean that we should not seek some form of control over the use of new technologies. If there is one thing the turbulence of recent years has taught us, it is that society needs mechanisms to deal with the huge power imbalance between technology companies and citizens. The European proposal is a first step, but the technological changes we will face in the coming years will require a much broader and more creative range of action, going beyond generic restrictions embedded in bureaucratic regulations.

Germany: Man armed with machete enters university library and threatens staff

Germany: Man armed with machete enters university library and threatens staff His body naturally produces alcohol, he is acquitted after a drunk driving conviction

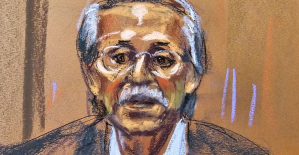

His body naturally produces alcohol, he is acquitted after a drunk driving conviction Who is David Pecker, the first key witness in Donald Trump's trial?

Who is David Pecker, the first key witness in Donald Trump's trial? What does the law on the expulsion of migrants to Rwanda adopted by the British Parliament contain?

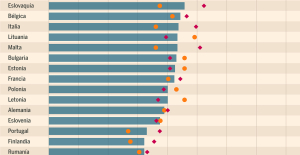

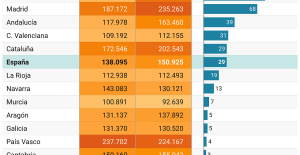

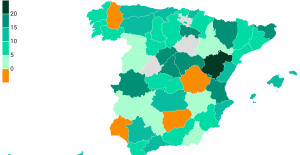

What does the law on the expulsion of migrants to Rwanda adopted by the British Parliament contain? Spain is the country in the European Union with the most overqualified workers for their jobs

Spain is the country in the European Union with the most overqualified workers for their jobs Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024

Parvovirus alert, the “fifth disease” of children which has already caused the death of five babies in 2024 Colorectal cancer: what to watch out for in those under 50

Colorectal cancer: what to watch out for in those under 50 H5N1 virus: traces detected in pasteurized milk in the United States

H5N1 virus: traces detected in pasteurized milk in the United States Insurance: SFAM, subsidiary of Indexia, placed in compulsory liquidation

Insurance: SFAM, subsidiary of Indexia, placed in compulsory liquidation Under pressure from Brussels, TikTok deactivates the controversial mechanisms of its TikTok Lite application

Under pressure from Brussels, TikTok deactivates the controversial mechanisms of its TikTok Lite application “I can’t help but panic”: these passengers worried about incidents on Boeing

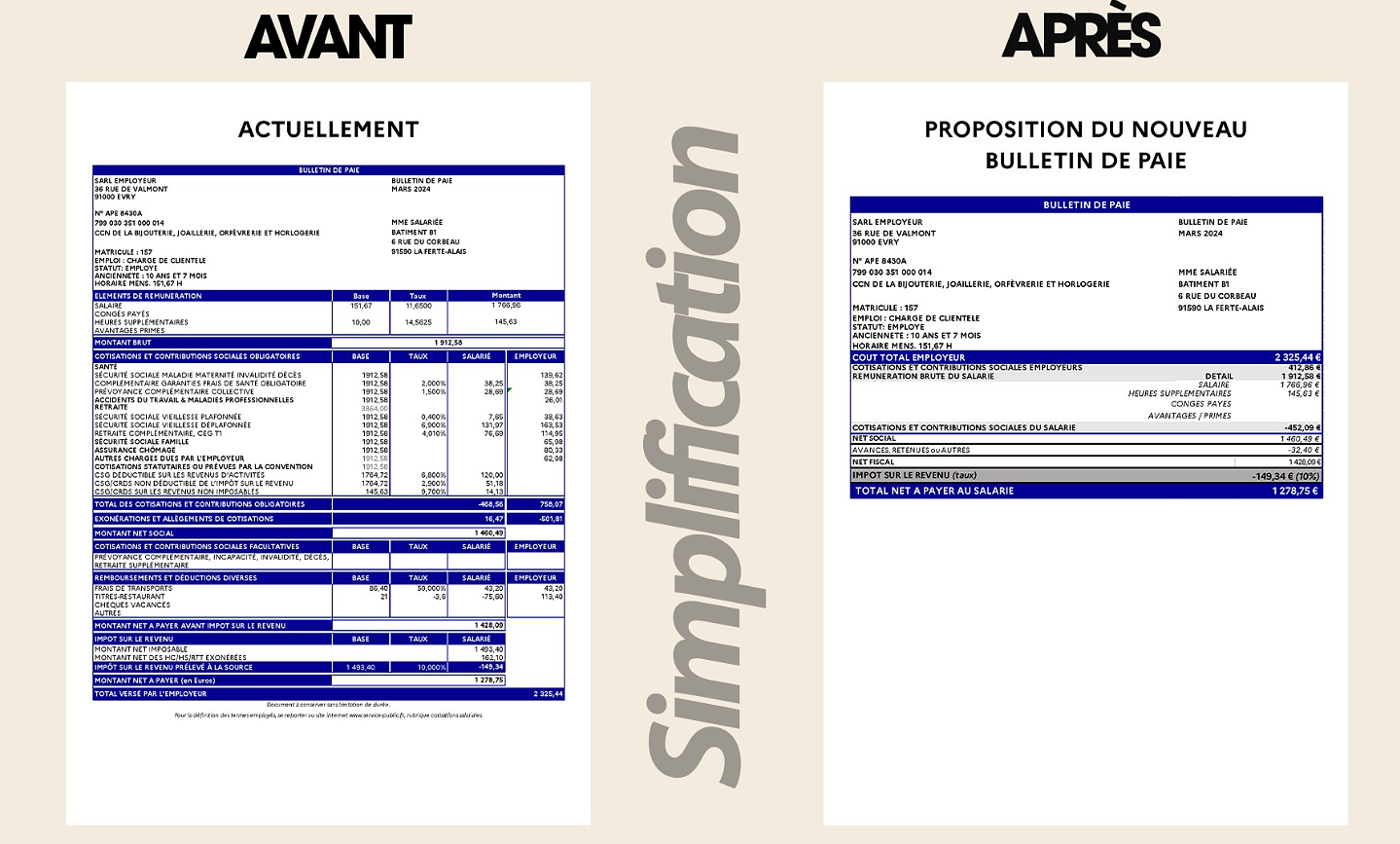

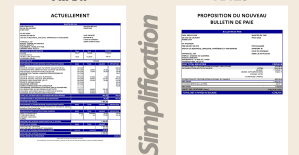

“I can’t help but panic”: these passengers worried about incidents on Boeing “I’m interested in knowing where the money that the State takes from me goes”: Bruno Le Maire’s strange pay slip sparks controversy

“I’m interested in knowing where the money that the State takes from me goes”: Bruno Le Maire’s strange pay slip sparks controversy 25 years later, the actors of Blair Witch Project are still demanding money to match the film's record profits

25 years later, the actors of Blair Witch Project are still demanding money to match the film's record profits At La Scala, Mathilde Charbonneaux is Madame M., Jacqueline Maillan

At La Scala, Mathilde Charbonneaux is Madame M., Jacqueline Maillan Deprived of Hollywood and Western music, Russia gives in to the charms of K-pop and manga

Deprived of Hollywood and Western music, Russia gives in to the charms of K-pop and manga Exhibition: Toni Grand, the incredible odyssey of a sculptural thinker

Exhibition: Toni Grand, the incredible odyssey of a sculptural thinker Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV

Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price"

Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price" The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter

The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter A private jet company buys more than 100 flying cars

A private jet company buys more than 100 flying cars This is how housing prices have changed in Spain in the last decade

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella Rental prices grow by 7.3% in February: where does it go up and where does it go down?

Rental prices grow by 7.3% in February: where does it go up and where does it go down? Sale of Biogaran: The Republicans write to Emmanuel Macron

Sale of Biogaran: The Republicans write to Emmanuel Macron Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou

Europeans: “All those who claim that we don’t need Europe are liars”, criticizes Bayrou With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition

With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition Europeans: the schedule of debates to follow between now and June 9

Europeans: the schedule of debates to follow between now and June 9 These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Hand: Montpellier crushes Kiel and continues to dream of the Champions League

Hand: Montpellier crushes Kiel and continues to dream of the Champions League OM-Nice: a spectacular derby, Niçois timid despite their numerical superiority...The tops and the flops

OM-Nice: a spectacular derby, Niçois timid despite their numerical superiority...The tops and the flops Tennis: 1000 matches and 10 notable encounters by Richard Gasquet

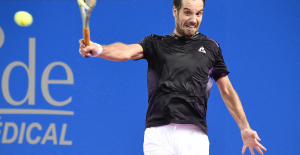

Tennis: 1000 matches and 10 notable encounters by Richard Gasquet Tennis: first victory of the season on clay for Osaka in Madrid

Tennis: first victory of the season on clay for Osaka in Madrid