What would you do? You can no longer brake your car and are forced to run over and presumably kill either an elderly woman or a young man. What if the lady has just recovered from a serious illness and finally wants to enjoy her life again, while the young man is a good-for-nothing who lives off other people? A decision is difficult or even impossible.

Self-driving vehicles have to deal with this problem. The development of autonomous driving is progressing steadily, and more and more cars are already equipped with assistance systems. Programmers develop algorithms not only to control the vehicles, but also to make decisions in critical situations. For example, the cars have to react to fog or to brake failure.

The fundamental moral problem that arises from this is often discussed in terms of the "trolley problem". The name goes back to a thought experiment: A train has gotten out of control and is about to run over five people. A switchman can divert it onto another track, but in doing so accepts the death of one other person. Which action is ethically right? Applied to self-driving cars, the question is how the algorithm should decide when it can only choose between bad alternatives.

The much-discussed trolley problem is philosophically interesting, but such situations are extremely rare in reality. Self-driving vehicles also prevent many accidents, for example because there is no driver who can fall asleep at the wheel and because the vehicles are not hampered by blind spots.

The overall ethical balance of autonomous vehicles is therefore positive. By comparison, the extremely rare trolley situations are of little relevance. The underlying philosophical problem is very old and fundamentally unsolvable without strong assumptions. A complicated algorithm that tries to capture every possible situation and reach a decision using weighted ethical factors is therefore not expedient.

A research group from the Technical University of Munich tried it anyway and published a new proposal for ethics in autonomous driving in the journal "Nature Machine Intelligence". The researchers defined five ethical rules for evaluating critical situations.

In this way, special protection is granted to those people who would be worst affected in the event of an accident. The scientists used these criteria to evaluate two thousand different situations. The weighting of ethical principles is particularly difficult here. By their criterion, the algorithm would prefer to endanger two strong men rather than an old lady.

This does not solve the problem of morally different assessments. In January 2017, the German ethics committee stated categorically in a report: "...any qualification based on personal characteristics (such as age or gender) is strictly prohibited".

We make another suggestion. In the extreme situation described, the algorithm should decide at random which of the two bad alternatives to choose. In other words: the lot is drawn. Because the basic ethical problem cannot be solved, chance should decide.

Each alternative thus has the same chance of being chosen. Over many such cases, the law of large numbers ensures that the risk is in fact spread evenly, which is true fairness. An equal distribution of risk is fairer than an algorithm based on questionable moral sensibilities. A random decision is also far less expensive and easier to implement.
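How simple such a rule would be can be illustrated with a few lines of Python. This is only a minimal sketch under our own assumptions: the function names `choose_at_random` and `selection_shares`, the labels of the two alternatives and the number of trials are hypothetical and are not taken from any real vehicle software. The simulation shows the law of large numbers at work: over many draws, each alternative is chosen in close to half of the cases.

```python
import random


def choose_at_random(alternatives, rng=random):
    """Draw the lot: every alternative has the same chance of being chosen."""
    return rng.choice(alternatives)


def selection_shares(alternatives, trials, seed=0):
    """Repeat the draw many times and return how often each alternative was picked."""
    rng = random.Random(seed)  # fixed seed so the illustration is reproducible
    counts = {alternative: 0 for alternative in alternatives}
    for _ in range(trials):
        counts[choose_at_random(alternatives, rng)] += 1
    return {alternative: count / trials for alternative, count in counts.items()}


# Hypothetical labels for the two bad alternatives in a dilemma situation.
shares = selection_shares(["alternative A", "alternative B"], trials=100_000)
print(shares)
```

With only a handful of draws the shares can deviate noticeably from one half; the more cases there are, the closer they come to an equal split.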

At first glance, a random decision appears arbitrary and not very rational. However, historical experience shows that selection by lot has been very successful. In ancient Greece, political offices in Athens were filled by drawing lots among the citizens. The Republic of Venice and other thriving northern Italian cities used this process successfully for centuries.

Like Athens before them, they were enormously successful politically, economically and culturally during this period. As with the trolley problem, from an ethical point of view, there is no single objective, correct decision. Random decisions work particularly well in this situation, since the decision-making process relies on randomness instead of human arbitrariness.

Iran-Israel conflict: what we know about the events of the night after the explosions in Isfahan

Iran-Israel conflict: what we know about the events of the night after the explosions in Isfahan Sydney: Assyrian bishop stabbed, conservative TikToker outspoken on Islam

Sydney: Assyrian bishop stabbed, conservative TikToker outspoken on Islam Torrential rains in Dubai: “The event is so intense that we cannot find analogues in our databases”

Torrential rains in Dubai: “The event is so intense that we cannot find analogues in our databases” Rishi Sunak wants a tobacco-free UK

Rishi Sunak wants a tobacco-free UK Alert on the return of whooping cough, a dangerous respiratory infection for babies

Alert on the return of whooping cough, a dangerous respiratory infection for babies Can relaxation, sophrology and meditation help with insomnia?

Can relaxation, sophrology and meditation help with insomnia? WHO concerned about spread of H5N1 avian flu to new species, including humans

WHO concerned about spread of H5N1 avian flu to new species, including humans New generation mosquito nets prove much more effective against malaria

New generation mosquito nets prove much more effective against malaria The A13 motorway closed in both directions for an “indefinite period” between Paris and Normandy

The A13 motorway closed in both directions for an “indefinite period” between Paris and Normandy The commitment to reduce taxes of 2 billion euros for households “will be kept”, assures Gabriel Attal

The commitment to reduce taxes of 2 billion euros for households “will be kept”, assures Gabriel Attal Unemployment insurance: Gabriel Attal leans more towards a tightening of affiliation conditions

Unemployment insurance: Gabriel Attal leans more towards a tightening of affiliation conditions “Shrinkflation”: soon posters on shelves to alert consumers

“Shrinkflation”: soon posters on shelves to alert consumers The restored first part of Abel Gance's Napoléon presented at Cannes Classics

The restored first part of Abel Gance's Napoléon presented at Cannes Classics Sting and Deep Purple once again on the bill at the next Montreux Jazz Festival

Sting and Deep Purple once again on the bill at the next Montreux Jazz Festival Rachida Dati: one hundred days of Culture on the credo of anti-elitism

Rachida Dati: one hundred days of Culture on the credo of anti-elitism The unbearable wait for Marlène Schiappa’s next masterpiece

The unbearable wait for Marlène Schiappa’s next masterpiece Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV

Skoda Kodiaq 2024: a 'beast' plug-in hybrid SUV Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price"

Tesla launches a new Model Y with 600 km of autonomy at a "more accessible price" The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter

The 10 best-selling cars in March 2024 in Spain: sales fall due to Easter A private jet company buys more than 100 flying cars

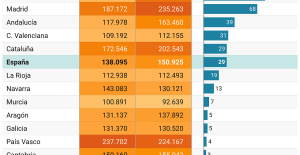

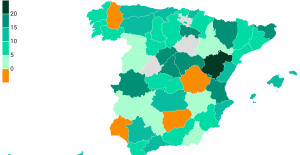

A private jet company buys more than 100 flying cars This is how housing prices have changed in Spain in the last decade

This is how housing prices have changed in Spain in the last decade The home mortgage firm drops 10% in January and interest soars to 3.46%

The home mortgage firm drops 10% in January and interest soars to 3.46% The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella

The jewel of the Rocío de Nagüeles urbanization: a dream villa in Marbella Rental prices grow by 7.3% in February: where does it go up and where does it go down?

Rental prices grow by 7.3% in February: where does it go up and where does it go down? With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition

With the promise of a “real burst of authority”, Gabriel Attal provokes the ire of the opposition Europeans: the schedule of debates to follow between now and June 9

Europeans: the schedule of debates to follow between now and June 9 Europeans: “In France, there is a left and there is a right,” assures Bellamy

Europeans: “In France, there is a left and there is a right,” assures Bellamy During the night of the economy, the right points out the budgetary flaws of the macronie

During the night of the economy, the right points out the budgetary flaws of the macronie These French cities that will boycott the World Cup in Qatar

These French cities that will boycott the World Cup in Qatar Champions League: France out of the race for 5th qualifying place

Champions League: France out of the race for 5th qualifying place Ligue 1: at what time and on which channel to watch Nantes-Rennes?

Ligue 1: at what time and on which channel to watch Nantes-Rennes? Marseille-Benfica: 2.99 million viewers watching OM’s victory on M6

Marseille-Benfica: 2.99 million viewers watching OM’s victory on M6 Cycling: Cofidis continues its professional adventure until 2028

Cycling: Cofidis continues its professional adventure until 2028